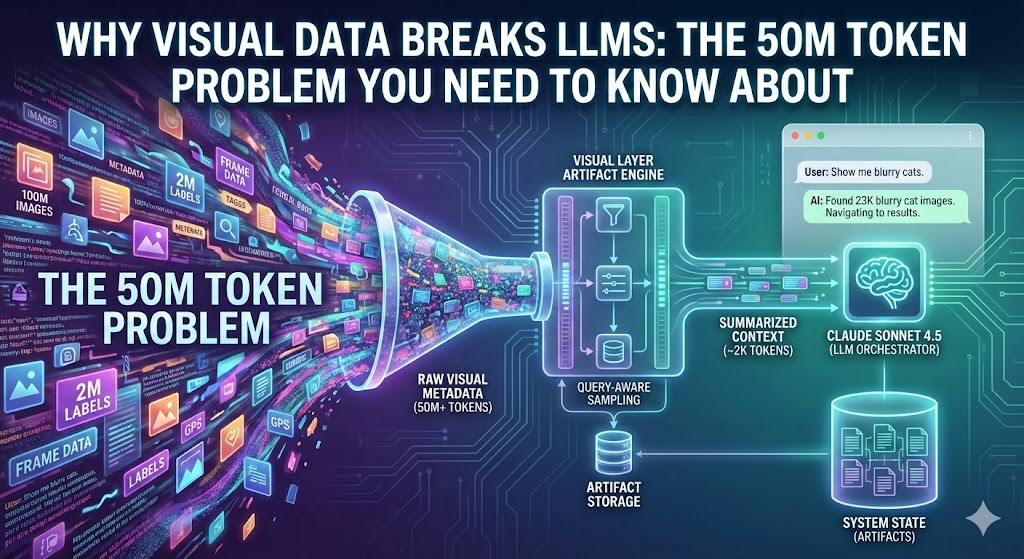

At Visual Layer, we help AI and data teams organize, explore, enrich, and extract insights from massive collections of unstructured image and video data. Our platform includes tools for streamlining data curation pipelines and improving model performance, with features such as smart clustering, quality analysis, semantic search, and visual search. A key part of our technology is the ability to enrich visual datasets with object tags, captions, bounding boxes, and other quality insights. Today, we're releasing FREE enriched versions of several well-known academic datasets, adding annotations that can help ML researchers and practitioners improve their models.

Enriched ImageNet

Our ImageNet-1K-VL-Enriched dataset adds a layer of information on top of the original ImageNet-1K dataset. With enriched captions, bounding boxes, and flagged quality issues, it supports a range of machine learning tasks, from image retrieval to visual question answering.

Dataset Overview

This enriched version of ImageNet-1K consists of six columns:

- image_id: The original image filename.

- image: Image data in PIL Image format.

- label: Original label from the ImageNet-1K dataset.

- label_bbox_enriched: Bounding box information, including coordinates, confidence scores, and labels generated using object detection models.

- caption_enriched: Captions generated using the BLIP-2 captioning model.

- issues: Identified quality issues (e.g., duplicates, mislabels, outliers).
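Each record behaves like a dictionary keyed by these column names. As an illustration, the sketch below keeps only confident detections from `label_bbox_enriched`. The exact layout of that field is not documented here, so the code assumes each entry is a dict with hypothetical `bbox`, `confidence`, and `label` keys; inspect a real record and adjust the key names before relying on it.

```python
from typing import Any, Dict, List

# Hypothetical key names: verify them against an actual record from the dataset.
BBOX_KEY = "bbox"
SCORE_KEY = "confidence"
LABEL_KEY = "label"


def confident_boxes(row: Dict[str, Any], min_score: float = 0.5) -> List[Dict[str, Any]]:
    """Return the enriched detections whose confidence is at least min_score.

    Assumes row["label_bbox_enriched"] is a list of dicts, or None/missing
    when no detections were produced. The per-box key names are assumptions.
    """
    detections = row.get("label_bbox_enriched") or []
    return [d for d in detections if d.get(SCORE_KEY, 0.0) >= min_score]


if __name__ == "__main__":
    # A hand-built row that mimics the assumed schema (not real dataset output).
    sample = {
        "image_id": "example.JPEG",
        "label": 0,
        "label_bbox_enriched": [
            {"bbox": [10, 20, 200, 180], "confidence": 0.91, "label": "fish"},
            {"bbox": [0, 0, 50, 40], "confidence": 0.32, "label": "person"},
        ],
    }
    for box in confident_boxes(sample):
        print(box[LABEL_KEY], box[BBOX_KEY])
```

On a loaded split, the same helper could be passed to `Dataset.filter`, for example `ds[split].filter(lambda row: len(confident_boxes(row)) > 0)`, where `split` is whichever split name the dataset exposes.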

How to Use the Dataset

The enriched ImageNet dataset is available via the Hugging Face Datasets library:

```python
import datasets

ds = datasets.load_dataset("visual-layer/imagenet-1k-vl-enriched")
```

You can also interactively explore this dataset with our visualization platform. Check it out here. It's free, and no sign-up is required.

Enriching the COCO 2014 Dataset

The COCO-2014-VL-Enriched dataset is our enhanced version of the popular COCO 2014 dataset, now featuring quality insights. It adds a curation layer that flags issues such as duplicates, mislabels, outliers, and suboptimal images (e.g., dark, blurry, or overly bright).

Dataset Overview

The enriched COCO 2014 dataset includes the following columns:

- image_id: The original image filename from the COCO dataset.

- image: Image data in PIL Image format.

- label_bbox: Original bounding box annotations, along with enriched information such as confidence scores and labels generated using object detection models.

- issues: Identified quality issues, such as duplicate, mislabeled, dark, blurry, bright, and outlier images.
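The `issues` column lends itself to a simple cleaning pass. The sketch below collects the issue names attached to each row, tests whether a row is free of unwanted issue types, and tallies how often each issue occurs. The storage format of `issues` is an assumption: the code accepts either plain strings or dicts carrying the name under a hypothetical `issue_type` key, and the names in the default exclusion list are illustrative, so inspect a real record first.

```python
from collections import Counter
from typing import Any, Dict, Iterable, Set

# Hypothetical: the key that holds an issue's name when issues are stored as dicts.
ISSUE_TYPE_KEY = "issue_type"

# Illustrative issue names; the dataset's actual labels may differ.
DEFAULT_EXCLUDE = ("duplicate", "mislabel", "dark", "blurry", "bright", "outlier")


def issue_types(row: Dict[str, Any]) -> Set[str]:
    """Collect the issue names attached to one row.

    Assumes row["issues"] is a list whose items are either strings or dicts
    with the name under ISSUE_TYPE_KEY; None or a missing key means no issues.
    """
    names = set()
    for item in row.get("issues") or []:
        name = item if isinstance(item, str) else item.get(ISSUE_TYPE_KEY)
        if name:
            names.add(name)
    return names


def is_clean(row: Dict[str, Any], exclude: Iterable[str] = DEFAULT_EXCLUDE) -> bool:
    """Return True if the row carries none of the excluded issue types."""
    return not (issue_types(row) & set(exclude))


def issue_histogram(rows: Iterable[Dict[str, Any]]) -> Counter:
    """Count how many rows carry each issue type."""
    counts: Counter = Counter()
    for row in rows:
        counts.update(issue_types(row))
    return counts
```

Because `Dataset.filter` calls its function with one example dict at a time by default, `ds[split].filter(is_clean)` would keep only the rows without flagged issues, again assuming the schema above.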

How to Use the Dataset

You can easily access this dataset using the Hugging Face Datasets library:

```python
import datasets

ds = datasets.load_dataset("visual-layer/coco-2014-vl-enriched")
```

This dataset is also available in our free visualization platform, with no sign-up required. Check it out here.

Additional Enriched Datasets

In addition to COCO 2014 and ImageNet-1K, we’ve enriched several other widely used datasets and made them available on Hugging Face:

- Fashion-MNIST: https://huggingface.co/datasets/visual-layer/fashion-mnist-vl-enriched

- Food-101: https://huggingface.co/datasets/visual-layer/food101-vl-enriched

- CIFAR-10: https://huggingface.co/datasets/visual-layer/cifar10-vl-enriched

- Oxford Flowers: https://huggingface.co/datasets/visual-layer/oxford-flowers-vl-enriched

- MNIST: https://huggingface.co/datasets/visual-layer/mnist-vl-enriched

- Human Action Recognition: https://huggingface.co/datasets/visual-layer/human-action-recognition-vl-enriched
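All of these datasets follow the same `visual-layer/<name>-vl-enriched` repository pattern, so a small lookup table makes it easy to switch between them. The sketch below maps short names (our own shorthand, not official identifiers) to the repository IDs listed above and forwards any extra keyword arguments to `datasets.load_dataset`.

```python
from typing import Dict

# Repository IDs from the lists above; the short names on the left are our own shorthand.
ENRICHED_REPOS: Dict[str, str] = {
    "imagenet-1k": "visual-layer/imagenet-1k-vl-enriched",
    "coco-2014": "visual-layer/coco-2014-vl-enriched",
    "fashion-mnist": "visual-layer/fashion-mnist-vl-enriched",
    "food101": "visual-layer/food101-vl-enriched",
    "cifar10": "visual-layer/cifar10-vl-enriched",
    "oxford-flowers": "visual-layer/oxford-flowers-vl-enriched",
    "mnist": "visual-layer/mnist-vl-enriched",
    "human-action-recognition": "visual-layer/human-action-recognition-vl-enriched",
}


def load_enriched(name: str, **kwargs):
    """Load one enriched dataset by short name, passing kwargs to load_dataset."""
    try:
        repo_id = ENRICHED_REPOS[name]
    except KeyError:
        raise ValueError(
            f"unknown dataset {name!r}; choose from {sorted(ENRICHED_REPOS)}"
        ) from None
    # Imported lazily so the lookup table works without the datasets package installed.
    import datasets

    return datasets.load_dataset(repo_id, **kwargs)
```

For example, `load_enriched("cifar10", streaming=True)` would iterate over records without downloading the full dataset first, since `streaming` is a standard `load_dataset` argument.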

Explore the Datasets

Visual Layer offers a free platform, with no sign-up required, for interactively visualizing these datasets. You can explore each dataset, identify quality issues, and get hands-on experience with our enrichment capabilities. Take a look here: https://app.visual-layer.com/datasets