TL;DR

- Massive amounts of visual data are underutilized by defense and intelligence organizations, overwhelmed by a torrent of new content every hour. Visual AI for defense is crucial to address this; those not strategically adopting will be left behind.

- Without AI-native platforms for data curation and unified workflows, teams face significant challenges, including delayed threat detection and operational friction. Relying on legacy manual reviews and fragile homegrown tools proves unsustainable, as they lack the scalability and semantic search capabilities necessary to keep pace with modern demands.

From Data Chaos to Actionable Intelligence

Defense and intelligence teams are navigating a deluge of visuals - images, videos and more. Petabytes of data from drones, CCTV, body cameras, and open-source feeds flood operations centers daily. Within this chaos lie the mission-critical clues that inform life-or-death decisions.

Yet, the sheer volume makes timely analysis nearly impossible, dangerously increasing the risk that key indicators are missed. These failures aren't just data points; they compromise operational readiness, jeopardize public safety, and weaken national defense.

The Downstream Impact of a Capability Gap

Without the right infrastructure, organizations are trapped in outdated workflows that drive up costs while degrading mission success. These problems compound, systematically eroding confidence and capability. Teams shift from strategic foresight to reactive firefighting as decision-makers lose trust in inconsistent data. Operational risk climbs—not because threats are more complex, but because the systems designed to provide clarity have failed to evolve.

This failure creates a cascade of challenges:

- Missed critical threats because teams lack the ability to perform semantic search across petabytes of footage. This leaves them blind to emerging threats. Critical intelligence surfaces far too late, as vast portions of data remain unindexed and fundamentally unknown.

- Error-prone manual reviews because without workflow automation, teams are forced to rely on manual processes. These processes are inherently inconsistent due to varied labeling standards, human error, and fatigue. This ultimately erodes trust and creates systemic blind spots.

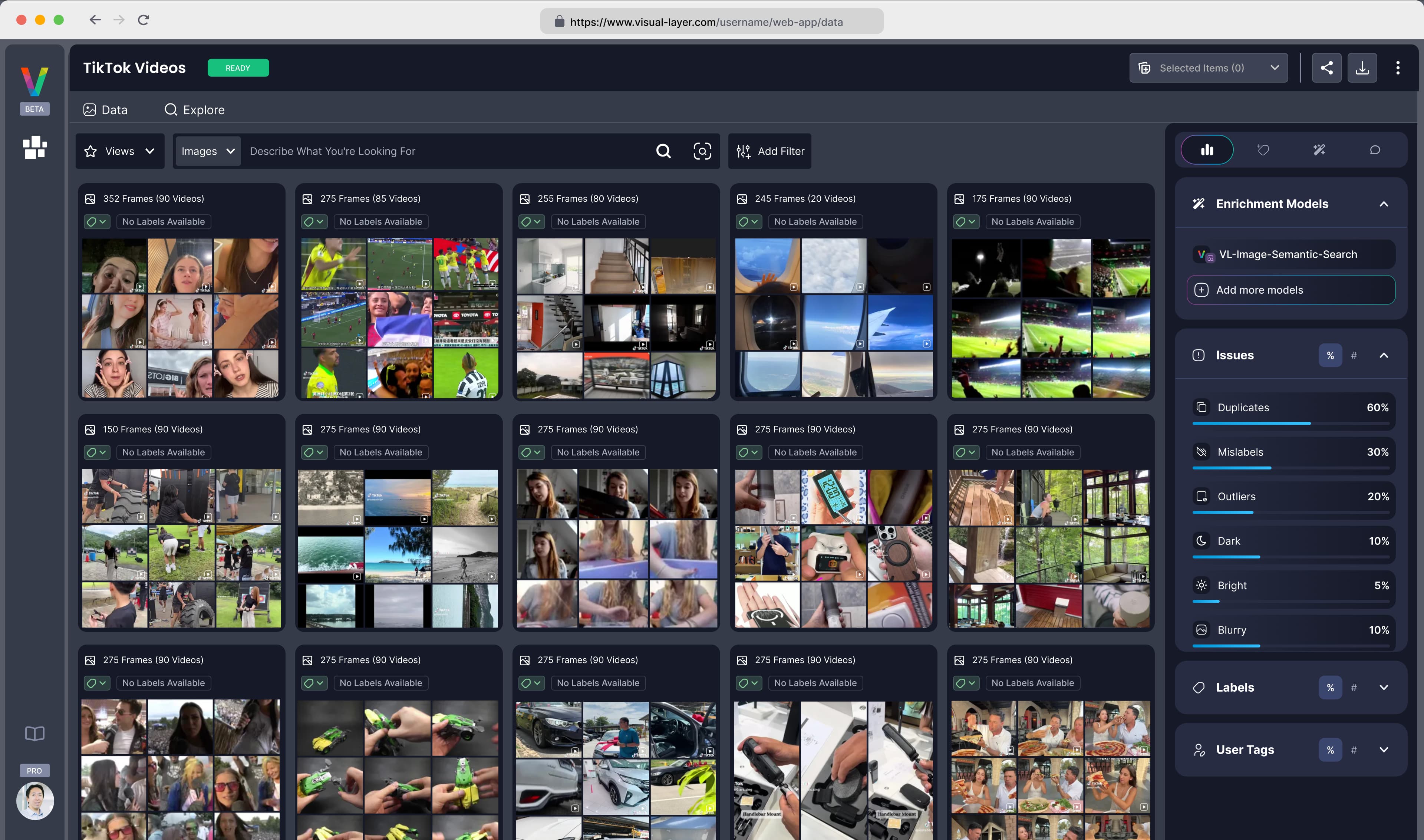

- Poor AI predictions because without automated data curation tools, AI vision and LLM models for defense are trained on noisy data. The lack of filtering for duplicates, mislabels, and blurriness leads to unreliable outputs and wastes valuable resources.

- Drained budgets because without elastic infrastructure designed for visual data, homegrown tools that can’t scale create significant technical debt. As a result, funds are diverted from innovation to mere maintenance due to high compute costs and excessive manual labor.

- Slow, siloed workflows because without a unified platform, teams are forced into fragmented operations using incompatible tools. This prevents intelligence from flowing seamlessly between units, severely limiting the ability to act cohesively.

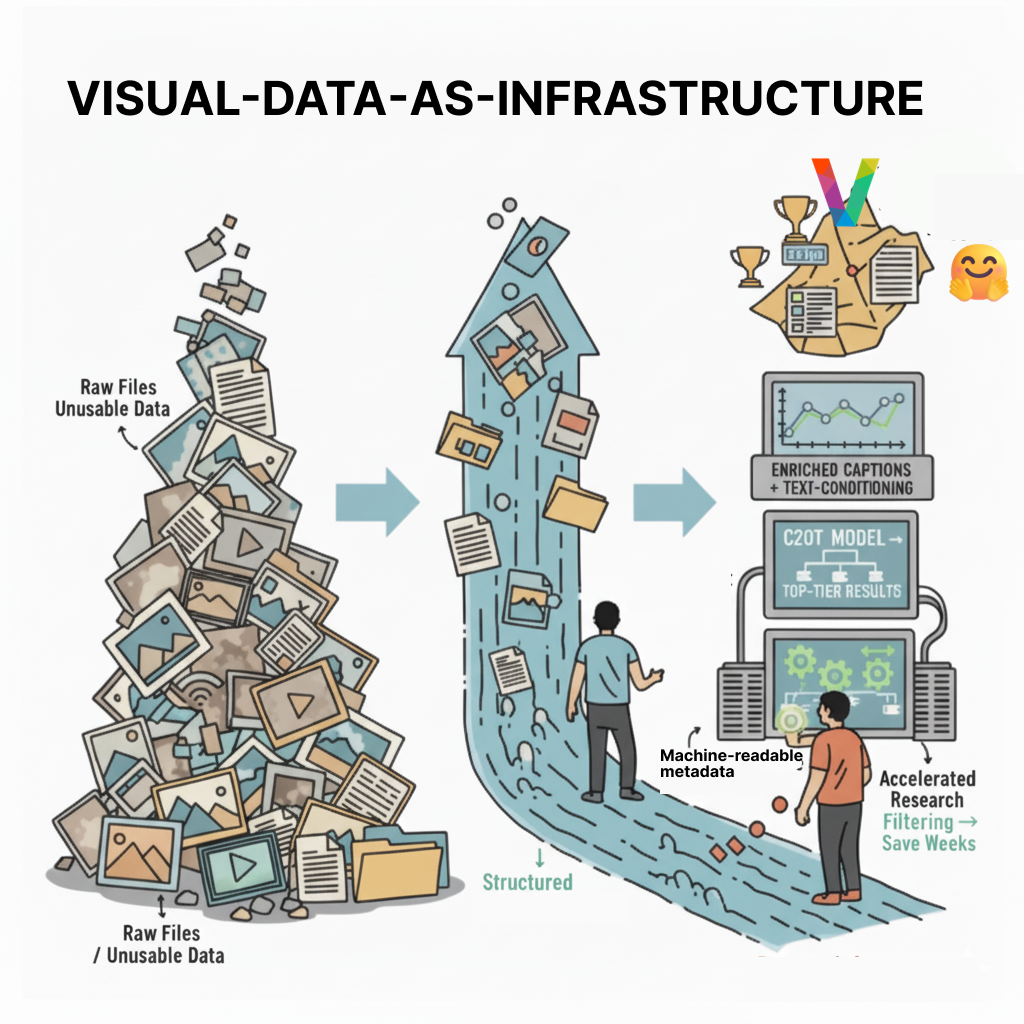

The solution begins with reimagining visual data not as static files to be archived, but as live intelligence to be harnessed. Leading organizations understand that this data demands rapid processing, intelligent filtering, and on-demand accessibility.

By embracing scalable, AI-native visual workflows, they gain the operational speed and analytical precision modern threats demand.

Why Legacy Approaches Fall Short

The Limits of Manual Review

Manual review, while a valuable process for detailed validation, cannot keep pace with today's flood of visual data. It is inherently slow, prone to human error, and inconsistent across teams and time.

Relying on it as a primary method of triage guarantees that teams will always be behind, reacting to events long after they’ve occurred and leaving vast quantities of data completely untouched.

The Hidden Costs of Homegrown Systems

In an attempt to cope, many organizations turn to custom, homegrown solutions. These systems initially feel empowering, but over time, they often become dangerously fragile, collapsing under the weight of one-off scripts and delicate integrations.

As performance bottlenecks become routine and key personnel leave, the intricate knowledge needed to maintain these systems vanishes. This results in continuous reactive problem-solving, stalled innovation, and a dangerous dependency on systems that cannot scale reliably.

A New Age for Visual Intelligence

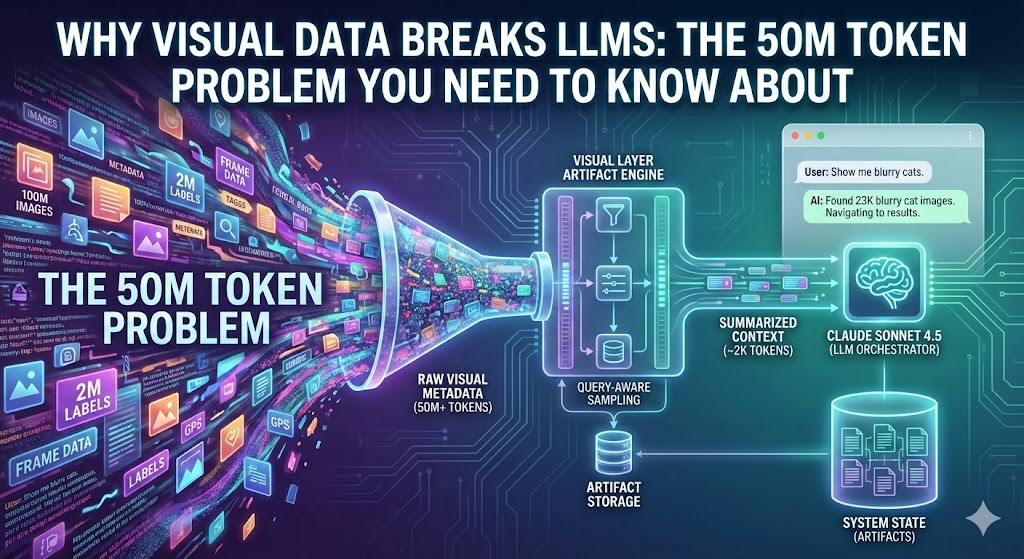

We’ve seen what large language models (LLMs) did for text. Visual data, however, is several orders of magnitude more complex—more nuanced, more ambiguous, and far more consequential in defense settings.

The truth is clear: if you cannot find, understand, and act on your data, it holds no value.

There is a better approach. Leading organizations are transitioning to AI-native solutions designed explicitly for visual data triage, search, and management at operational scale, becoming true ISR platforms.

The ideal system is a force multiplier—one that provides confidence, not just features. It processes petabytes of footage in hours, not weeks. It surfaces threats at mission speed, providing insights while they are still relevant.

Such a platform enables the training of highly accurate models on a foundation of high-integrity data. It also reduces infrastructure costs through flexible and elastic deployments. Most importantly, it unifies technical and non-technical teams around a shared, interactive context, ensuring everyone is aligned.

Capabilities for Mission Success

To meet the evolving demands of modern defense and intelligence, organizations require a new class of capabilities designed specifically for visual data. These capabilities must move beyond the limitations of legacy systems and provide a robust foundation for actionable intelligence.

- Process Petabytes with Unprecedented Speed: Effective systems allow organizations to process massive datasets and extract relevant insights in a fraction of the time, eliminating bottlenecks and matching the speed of operations.

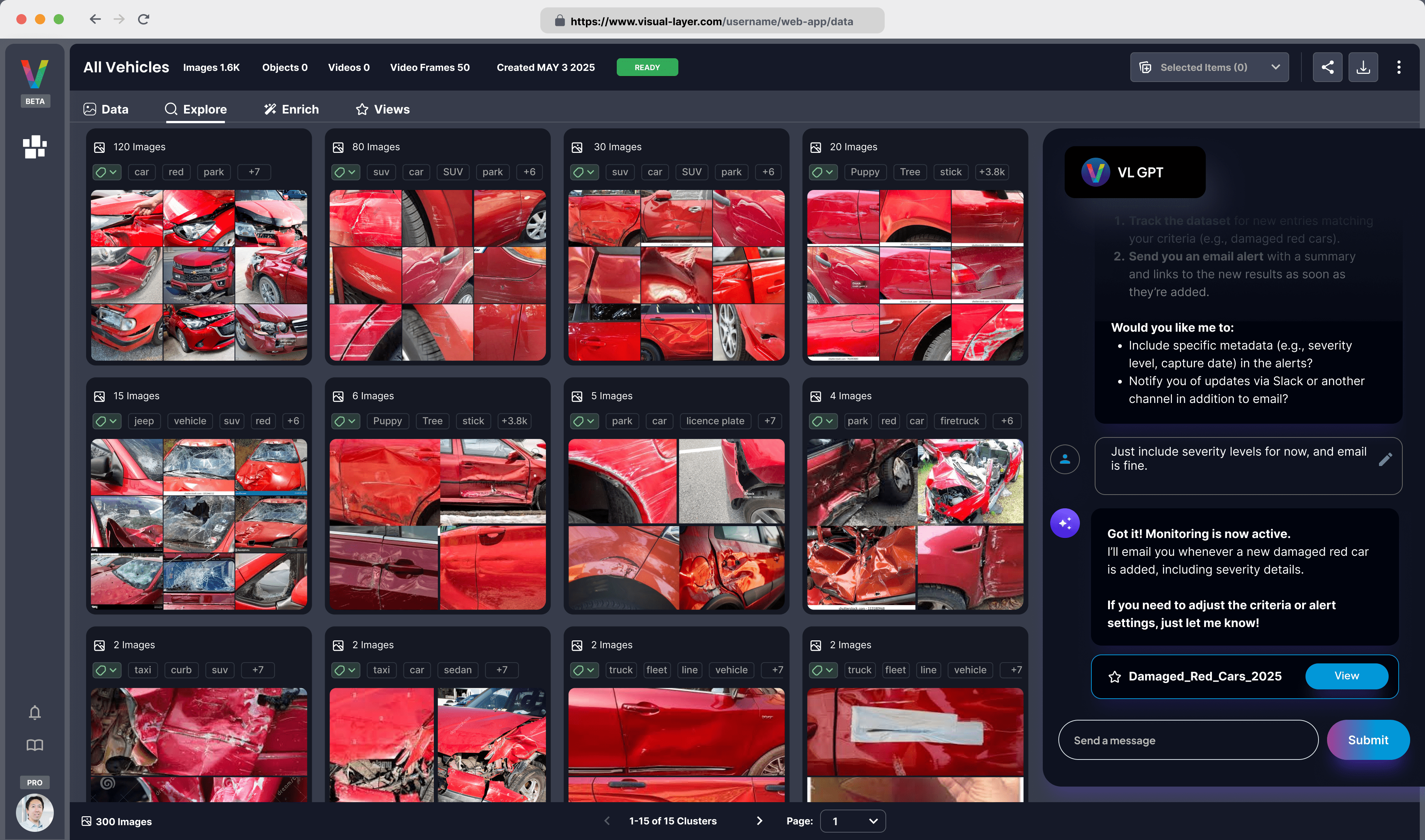

- Surface Threats and Anomalies at Mission Speed: The best systems enable teams to act while an event is still relevant, not long after it has passed, with context-aware alerting that allows for rapid verification and response.

- Build AI on a Foundation of High-Integrity Data: Leading organizations invest in structured datasets with automated quality checks. High-integrity data builds trust in the system and allows models to scale that trust across teams.

- Reduce Infrastructure Costs, Not Capability: Sustainable systems use elastic infrastructure that scales with demand, whether deployed in a secure on-prem, private cloud, or hybrid environment, providing maximum flexibility at a lower cost.

- Unify Technical and Non-Technical Teams: The most effective systems bring everyone into the same visual environment, with interfaces tailored to each role, eliminating confusion and accelerating every decision.

Visual Layer: From Mission Impossible to Mission Success

A national homeland security agency, recognized for its critical role in conflicts like the Iron Swords War, faced a daunting task. The agency was responsible for monitoring hundreds of miles of border, a mission complicated by a massive and overwhelming archive of footage.

From CCTV to drone and body-cam feeds, the volume of data had skyrocketed into petabytes, all poorly indexed and nearly impossible to search effectively. Analysts were bogged down, sifting through endless hours of footage and frequently missing critical details like nighttime crossings or unusual vehicle patterns. Decisions were being made with incomplete information, putting national security at risk.

With Visual Layer, what took weeks of painstaking manual effort now happens in a fraction of the time. The platform rapidly processed and indexed every frame from all surveillance feeds, enriching the data with crucial metadata. The team quickly spotted unauthorized movements, and the platform's exploration tools enabled analysts to uncover intricate behavior patterns across different sensors.

With the noise filtered out and tedious review tasks eliminated, teams redirected their focus toward proactive initiatives. Analysts explored cross-source behavior patterns, operational leads built predictive models, and leadership gained insight into threat trends over time. What started as a firefighting mission became a forward-looking operation—driven by data, not overwhelmed by it.

About Visual Layer

Visual Layer is your AI-enabled partner in visual intelligence, engineered for clarity and impact.

Our platform is built on a graph-based engine for deep situational awareness, learning the multimodal relationships between images, objects, and text. We offer full data control with secure on-prem and private cloud deployments and provide flexible AI integration, including BYOM (Bring Your Own Model).

Visual Layer is the infrastructure that enables AI to deliver operational impact. We empower organizations to:

- Discover Critical Insights Rapidly with semantic and visual search across petabytes of data.

- Predict and Act at Mission Speed by configuring tunable alerts on visual events.

- Build AI on High-Integrity Visual Data by automatically filtering out noise and exporting curated datasets.

- Scale Efficiently with Elastic Infrastructure in secure private cloud, on-prem, or hybrid configurations.

- Unify Teams around a single, collaborative, and highly interactive platform.

What Defense & Intelligence Teams Gain

For defense organizations, Visual Layer equips teams with the speed, transparency, and collaboration they need to stay ahead of the threat landscape.

Adopting Visual Layer provides tangible advantages. It allows for the swift sorting of petabyte-scale data, ensuring vital details stand out. Analysts can uncover compelling patterns and identify anomalies that would otherwise remain hidden, enhancing their GEOINT tools. Tedious processes are transformed through reliable automation, reducing backlogs and enhancing productivity. As a result, teams see decreased operational costs and improved response times, fostering a deep sense of confidence in their mission-critical capabilities.

Ready to Achieve Decisive Advantage?

Are you ready to transform your visual data operations? Request a strategic briefing to explore mission-ready intelligence at any scale, on your terms.