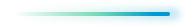

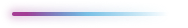

TL;DR: Visual data management systems are lacking in all aspects: storage, quality (deduplication, anomaly detection), search, analytics and visualization. As a consequence, companies and researchers lose product reliability and working hours, waste storage and compute, and, most importantly, miss the chance to unlock the full potential of their data. To start addressing this, we analyzed numerous state-of-the-art computer vision datasets and found that common problems such as corrupted images, outliers, wrong labels, and duplicated images can reach a level of up to 46%!¹

As a first step in solving this problem, we introduce a simple new tool that quickly and accurately detects ALL outliers and duplicates in your dataset. Meet fastdup.

Introduction

A decade after deep learning systems first topped key computer vision (CV) benchmarks, computer vision applications and use cases can be found across all sectors. Many novel use cases are emerging, such as autonomous vehicles, 3D reconstruction of homes from images, and robots that perform many different tasks. Globally, there are over 250,000 people in the private sector who list computer vision skills or tools on their LinkedIn profiles.

The rise of visual sensors and the availability of AI models that can unlock visual data have led to an explosion in demand for CV talent and applications. Nevertheless, computer vision applications are still in their infancy. While many companies are collecting visual data, much of that data has yet to be properly utilized or analyzed due to a lack of access to tools and a skills gap.

We’ve spent the last decade building AI models for computer vision applications in manufacturing, automotive and consumer applications. Tools for building models now include high-level libraries (TensorFlow, PyTorch), model hubs (Model Zoo, TensorFlow Hub), and low-code tools (Matroid). In contrast, we believe most tools for storing, analyzing, navigating, and managing visual data still lack features essential for computer vision projects.

To understand the challenges facing teams tasked with building CV applications and products, we met with leaders at over 120 companies. Our research yielded a few key findings. First, around 90% of the companies work with image data (vs. video data). Even when raw data is in video format, teams still work with images due to the complexity of handling videos at scale. Second, around 60% of the companies work in the cloud (mostly AWS). Third, almost all of the teams we spoke with wrote their own custom tools for handling images, including storage, indexing, retrieval, visualization, and debugging. Everyone agreed that there are not enough tools for managing and using visual data.

The findings are well aligned with our experience: data tooling challenges are the key bottlenecks faced by the teams we interviewed. While computer vision models have become easier to build and tune, progress in data infrastructure for CV applications has lagged behind. As a consequence, computer vision teams struggle to incorporate basic data management features pertaining to data quality (deduplication, anomaly detection), search, and analytics. A few companies even store images as strings in traditional databases or squeeze them into HDF5 files.

State of Tools for Storing, Managing and Unlocking Visual Data

With tabular data, you can use a traditional database like Oracle or a cloud-based solution like Snowflake or Databricks. Data visualization tools such as Tableau and Looker can help you gain insights from your data. Distributed computing frameworks like Spark or Ray are available to analyze and process tabular data at scale.

Unlike tabular data, which is typically represented by strings, ints, floats or lists, image data is more involved. Image data normally contains raw images, plus metadata about each image such as photographer name, date, and location. Annotations are often used to mark objects and regions of interest in the images. Short feature vectors are commonly used to query and index the data.

In the case of visual data, there is no single database that can be used to query and index this complex information. Raw images are typically stored as flat files, metadata is sometimes stored in a traditional database, and annotations are commonly written in JSON. This makes it difficult to retrieve images, their metadata, annotations and feature vectors without writing code. Teams working on computer vision typically need to write code to gather and process data from multiple sources, some of them local, some of them in the cloud. Even simple tasks like previewing images stored in cloud storage require writing code. Coding is, of course, required for more complex tasks, like searching for images based on their content and some metadata fields.

This is the reason why large image repositories tend to get noisy over time. It is impossible for humans to oversee millions of images in order to ensure their content meets requirements or to find problems with their annotations or metadata. Corrections are made, but versions are hard to keep track of. A majority of annotations are made by humans, who are themselves a noisy source of information. Data with noisy annotations tends to result in suboptimal models. All these data management needs are particularly challenging due to the scale and complexity of the computations required.

Even researchers are becoming increasingly concerned with data quality. Deep learning pioneer Andrew Ng has recently been advocating that teams take a data-centric² approach to building AI applications. More specifically, Ng is urging companies to focus on “engineering practices for improving data in ways that are reliable, efficient, and systematic”, rather than on deeper and more complex machine learning models. Andrej Karpathy, head of AI at Tesla, states that 75% of their computer vision team’s efforts are spent on data cleaning and preparation³, and that not enough attention is given to actually looking at the data and gaining insights.

Insights from Key Datasets via Fastdup

We have decided to take a first step in tackling these challenges by writing and sharing a free software tool called fastdup. Before describing fastdup, we share some of the insights and findings gained from running it on some popular datasets. We experimented with a few dozen large datasets, but as a representative dataset we refer here mainly to the full ImageNet-21K dataset (11.5 million images). Additional results for other datasets can be found in our GitHub repo. Disclaimer: the state-of-the-art datasets discussed here were curated by the best computer vision researchers. Our aim is not to criticize the quality of those datasets but to propose how they can be improved further. We believe the reason we were able to produce these results quickly is that we had a better tool at hand, a technology that did not exist when those datasets were curated.

Duplicates and Data Leakage in ImageNet-21K

fastdup produced a few interesting findings on the ImageNet-21K dataset. We were surprised to find that there are 1.2M pairs of identical images in ImageNet-21K!⁴ Most of them are exact duplicates, which add no information to the data but waste storage and compute. In addition, 104,000 train/val⁵ leaks were identified by comparing similar images across the train and validation subsets. Train/val leaks are cases where the same image appears in both the train and the validation datasets, which means the model can memorize the images instead of learning how to generalize.
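To make the idea concrete, here is a minimal sketch of threshold-based duplicate and leak detection. It assumes each image already has a feature vector and reuses the 0.98 cosine-similarity threshold from footnote 4. The function names, file names, and vectors are made up for illustration, and the brute-force pairwise loop is not how fastdup works internally.

```python
import math
from itertools import combinations

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_duplicate_pairs(features, threshold=0.98):
    """Return (name_a, name_b, similarity) for every pair above the threshold.

    Brute force, O(n^2): fine for a toy example, not for millions of images.
    """
    pairs = []
    for (name_a, vec_a), (name_b, vec_b) in combinations(sorted(features.items()), 2):
        similarity = cosine_similarity(vec_a, vec_b)
        if similarity > threshold:
            pairs.append((name_a, name_b, similarity))
    return pairs

def find_leaks(pairs, split_of):
    """Keep only the pairs whose two images belong to different splits."""
    return [(a, b, s) for a, b, s in pairs if split_of[a] != split_of[b]]

# Toy feature vectors with hypothetical file names.
features = {
    "train/cat_1.jpg": [1.0, 0.0, 0.2],
    "train/cat_2.jpg": [1.0, 0.0, 0.2],    # exact duplicate of cat_1
    "val/cat_9.jpg": [0.99, 0.01, 0.21],   # near duplicate sitting in val
    "train/dog_1.jpg": [0.0, 1.0, 0.0],
}
split_of = {name: name.split("/")[0] for name in features}

duplicate_pairs = find_duplicate_pairs(features)
leaks = find_leaks(duplicate_pairs, split_of)
```

On this toy input the three cat images form three duplicate pairs, and the two pairs that involve `val/cat_9.jpg` are reported as train/val leaks.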

Graph Analytics for Finding Anomalies and Wrong Labels

Regarding annotation quality, for a dataset of millions of images it does not make sense to clean erroneous labels one by one. This is why we used graph clustering to group together similar images and make sure they have consistent labels. Not surprisingly, there are many classes of wrong labels. One example is broken images that slipped through unnoticed when websites were scraped. For example, 5,183 images scraped from Flickr are broken. This is an issue because those images still carry valid labels, which confuses the model.

A different type of label issue is a cluster of images of the same object that were given many different labels. Here is an example showing a daisy flower:

Performing graph analytics based on the relations between similar images and their label helps us to identify many issues of wrong annotations.
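The sketch below illustrates one simple form of this kind of check. It assumes a list of similar-image pairs is already available, groups images into clusters with a union-find pass (connected components), and flags clusters whose members carry more than one label. This is an illustrative stand-in and not necessarily the clustering fastdup uses; all names and labels are hypothetical.

```python
from collections import Counter, defaultdict

def connected_components(nodes, edges):
    """Group nodes into clusters using union-find over similarity edges."""
    parent = {node: node for node in nodes}

    def find(node):
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    clusters = defaultdict(list)
    for node in nodes:
        clusters[find(node)].append(node)
    return list(clusters.values())

def suspicious_clusters(clusters, label_of):
    """Return (majority_label, disagreeing_images) for mixed-label clusters."""
    report = []
    for cluster in clusters:
        counts = Counter(label_of[image] for image in cluster)
        if len(counts) > 1:
            majority, _ = counts.most_common(1)[0]
            minority = [img for img in cluster if label_of[img] != majority]
            report.append((majority, minority))
    return report

# Toy inputs: labels and similar pairs are made up for illustration.
label_of = {
    "a.jpg": "daisy", "b.jpg": "daisy", "c.jpg": "oxeye",
    "d.jpg": "truck", "e.jpg": "truck",
}
similar_pairs = [("a.jpg", "b.jpg"), ("b.jpg", "c.jpg"), ("d.jpg", "e.jpg")]

clusters = connected_components(list(label_of), similar_pairs)
flagged = suspicious_clusters(clusters, label_of)
```

Here `c.jpg` is flagged because it sits in a cluster whose majority label is `daisy`. A majority vote only points a reviewer at likely problems; the majority label itself can still be the wrong one.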

We can gain other interesting insights by looking at outlier images. Outliers are either bad images or images appended to the dataset by mistake, and they cannot help with the task that the dataset was collected for. Outlier computation is completely unsupervised (no annotations are needed).
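One common unsupervised way to surface outliers, shown here as a hedged sketch rather than fastdup's actual method, is to score each image by its similarity to its closest neighbor and flag images that resemble nothing else. The 0.5 cutoff, the function names, and the vectors below are assumptions chosen for the toy data.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_neighbor_similarity(features):
    """Map each image to its best similarity against any other image."""
    scores = {}
    for name, vec in features.items():
        scores[name] = max(
            cosine_similarity(vec, other)
            for other_name, other in features.items()
            if other_name != name
        )
    return scores

def find_outliers(features, max_similarity=0.5):
    """Images whose closest neighbor is still dissimilar (no labels needed)."""
    scores = nearest_neighbor_similarity(features)
    return sorted(name for name, score in scores.items() if score < max_similarity)

# Toy feature vectors with hypothetical file names.
features = {
    "bird_1.jpg": [0.9, 0.1, 0.0],
    "bird_2.jpg": [0.8, 0.2, 0.0],
    "bird_3.jpg": [0.85, 0.15, 0.05],
    "blank_scan.jpg": [0.0, 0.05, 1.0],
}
outliers = find_outliers(features)
```

Only `blank_scan.jpg` falls below the cutoff here, since the three bird vectors are all close to one another. In practice the cutoff would be tuned per dataset, for example by inspecting the lowest-scoring images first.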

In total, we calculate that around 2M images should be removed from the ImageNet-21K dataset to improve its quality.

Introducing Fastdup

fastdup is the tool we built to help unlock the above insights from some popular datasets. It works by indexing your visual data into short feature vectors, without the need to upload it all to the cloud. Then, a nearest neighbor graph model is built for finding relations between close images. Lastly, graph analytics is used to gain insights from those relations.
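The middle step, building a nearest neighbor graph over feature vectors, can be sketched in a few lines. This is a brute-force illustration under assumed inputs, not fastdup's C++ implementation; `build_knn_graph`, the value of `k`, and the vectors are hypothetical.

```python
import heapq
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def build_knn_graph(features, k=1):
    """Map each image to its k most similar images as (similarity, name)."""
    graph = {}
    for name, vec in features.items():
        candidates = (
            (cosine_similarity(vec, other), other_name)
            for other_name, other in features.items()
            if other_name != name
        )
        graph[name] = heapq.nlargest(k, candidates)
    return graph

# Toy two-dimensional feature vectors; real ones would be much longer.
features = {
    "a.jpg": [1.0, 0.0],
    "b.jpg": [0.9, 0.1],
    "c.jpg": [0.0, 1.0],
    "d.jpg": [0.1, 0.9],
}
graph = build_knn_graph(features, k=1)
```

In this toy graph `a.jpg` and `b.jpg` point at each other, as do `c.jpg` and `d.jpg`. Edges like these are the raw material for the graph analytics step, and at the scale of millions of images an approximate nearest neighbor index would normally replace the quadratic loop.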

fastdup’s index simplifies a large variety of common computer vision tasks. It can be used to learn normal behavior and find outliers in visual data. It looks for novel information to send for annotation, surfaces mistakes in annotations and labels, and can identify test/train leaks (cases where images from the training set are mistakenly added to the test set).

fastdup is fast: it is written in C++ and can easily handle tens of millions of images. It is simple to run using a single Python command. It runs in your cloud account or on premise (no need to upload your data to a vendor’s cloud account!). Finally, it is designed to be accurate and to output meaningful insights.

- For the ImageNet-21K dataset of 11.5 million images, end-to-end computation took 3 hours and cost $5 on a 32-core machine in Google Cloud (this cost can be brought down to $1.5 using spot instances).

- We did not use any GPUs and still achieved a throughput of 1000 images per second (the images had been resized ahead of time by the dataset authors).
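As a quick sanity check, the figures above are consistent with each other. The short calculation below uses only the numbers quoted in the two bullets; the per-core and per-million breakdowns are derived here for illustration and were not reported separately.

```python
# Figures quoted above for the ImageNet-21K run.
images = 11_500_000
hours = 3
cost_usd = 5.0
cores = 32

seconds = hours * 3600
throughput = images / seconds                       # images per second, whole machine
per_core = throughput / cores                       # images per second per core
cost_per_million = cost_usd / (images / 1_000_000)  # USD per million images

print(f"{throughput:.0f} images/s, {per_core:.1f} per core, "
      f"${cost_per_million:.2f} per million images")
```

This works out to roughly 1,065 images per second, which matches the quoted throughput of about 1000 images per second.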

Call for Action

We would love to engage with anyone passionate about computer vision who wants to help develop fastdup or use it for their visual data needs. Please join our Slack channel or reach out to us at info@visual-layer.com to discuss further.

[1] https://github.com/visualdatabase/fastdup

[2] https://spectrum.ieee.org/andrew-ng-data-centric-ai

[3] https://open.spotify.com/embed-podcast/episode/0IuwH7eTZ3TQBfU8XsMaRr

[4] We identified identical images as images whose feature vectors have a cosine similarity > 0.98.

[5] Train/val splits are taken from ImageNet-21K Pretraining for the Masses